ChatGPT has been found to have shared inaccurate information regarding drug usage, according to new research.

In a study led by Long Island University (LIU) in Brooklyn, New York, nearly 75% of drug-related, pharmacist-reviewed responses from the generative AI chatbot were found to be incomplete or wrong.

In some cases, ChatGPT, which was developed by OpenAI in San Francisco and released in late 2022, provided “inaccurate responses that could endanger patients,” the American Society of Health-System Pharmacists (ASHP), headquartered in Bethesda, Maryland, stated in a press release.

ChatGPT also generated “fake citations” when asked to cite references to support some responses, the same study found.

Along with her team, lead study author Sara Grossman, PharmD, associate professor of pharmacy practice at LIU, asked the AI chatbot real questions that were originally posed to LIU’s College of Pharmacy drug information service between 2022 and 2023.

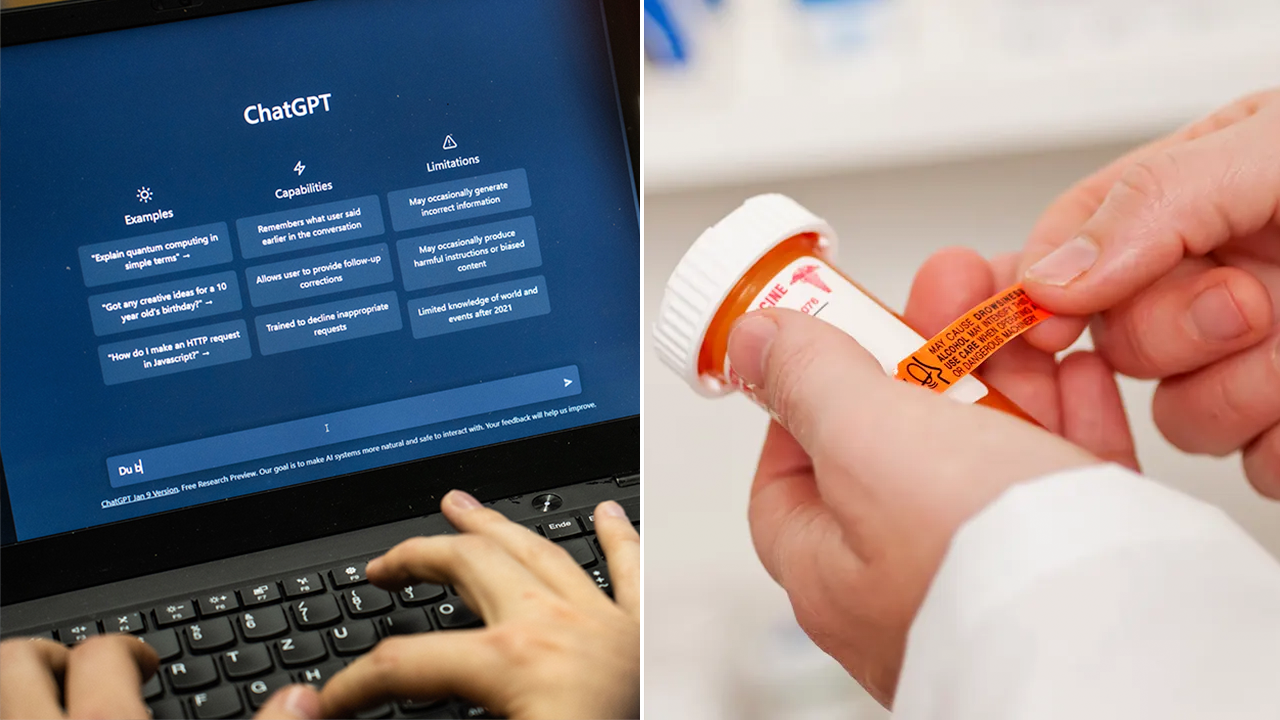

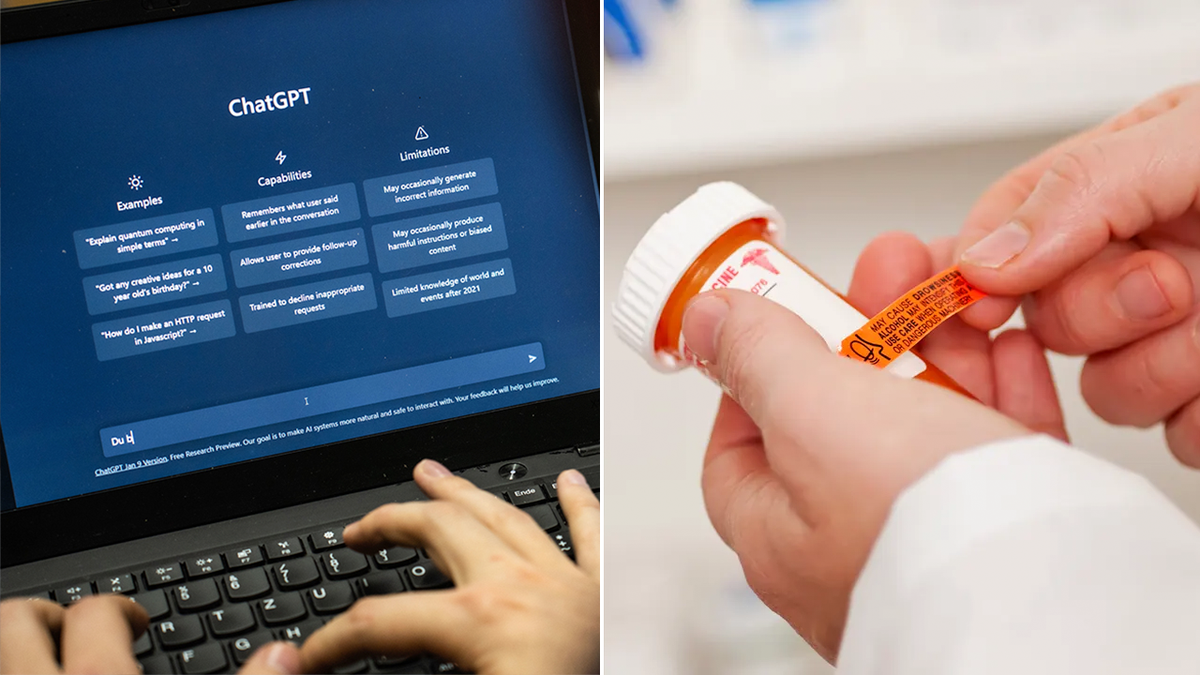

ChatGPT, the AI chatbot created by OpenAI, generated inaccurate responses about medications, a new study has found. The company itself previously said that “OpenAI’s models are not fine-tuned to provide medical information. You should never use our models to provide diagnostic or treatment services for serious medical conditions.” (LIONEL BONAVENTURE/AFP via Getty Images)

Of the 39 questions posed to ChatGPT, only 10 responses were deemed “satisfactory,” according to the research team’s criteria.

The study findings were presented at ASHP’s Midyear Clinical Meeting from Dec. 3 to Dec. 7 in Anaheim, California.

Grossman, the lead author, shared her initial reaction to the study’s findings with Fox News Digital.

Since “we had not used ChatGPT previously, we were surprised by ChatGPT’s ability to provide quite a bit of background information about the medication and/or disease state relevant to the question within a matter of seconds,” she said via email.

“Despite that, ChatGPT did not generate accurate and/or complete responses that directly addressed most questions.”

Grossman also mentioned her surprise that ChatGPT was able to generate “fabricated references to support the information provided.”

Out of 39 questions posed to ChatGPT, only 10 of the responses were deemed “satisfactory” according to the research team’s criteria. (Frank Rumpenhorst/picture alliance via Getty Images; iStock)

In one example she cited from the study, ChatGPT was asked if “a drug interaction exists between Paxlovid, an antiviral medication used as a treatment for COVID-19, and verapamil, a medication used to lower blood pressure.”

The AI model responded that no interactions had been reported with this combination.

But in reality, Grossman said, the two drugs pose a potential threat of “excessive lowering of blood pressure” when combined.

“Without knowledge of this interaction, a patient may suffer from an unwanted and preventable side effect,” she warned.

ChatGPT should not be considered an “authoritative source of medication-related information,” Grossman emphasized.

“Anyone who uses ChatGPT should make sure to verify information obtained from trusted sources, namely pharmacists, physicians or other health care providers,” Grossman added.

The LIU study did not evaluate the responses of other generative AI platforms, Grossman pointed out, so there is no data on how other AI models would perform under the same conditions.

“Regardless, it is always important to consult with health care professionals before using information that is generated by computers, which are not familiar with a patient’s specific needs,” she said.

Usage policy by ChatGPT

Fox News Digital reached out to OpenAI, the developer of ChatGPT, for comment on the new study.

OpenAI has a usage policy that disallows use for medical instruction, a company spokesperson previously told Fox News Digital in a statement.

Paxlovid, Pfizer’s antiviral medication to treat COVID-19, is displayed in this picture illustration taken on Oct. 7, 2022. When ChatGPT was asked if a drug interaction exists between Paxlovid and verapamil, the chatbot answered incorrectly, a new study reported. (REUTERS/Wolfgang Rattay/Illustration)

“OpenAI’s models are not fine-tuned to provide medical information. You should never use our models to provide diagnostic or treatment services for serious medical conditions,” the company spokesperson stated earlier this year.

“OpenAI’s platforms should not be used to triage or manage life-threatening issues that need immediate attention.”

The company also requires that when using ChatGPT to interface with patients, health care providers “must provide a disclaimer to users informing them that AI is being used and of its potential limitations.”

In addition, as Fox News Digital previously noted, one big caveat is that ChatGPT’s source of data is the internet, and there is plenty of misinformation on the web, as most people are aware.

That’s why the chatbot’s responses, however convincing they may sound, should always be vetted by a doctor.

The new study’s author suggested consulting with a health care professional before relying on generative AI for medical inquiries. (iStock)

Additionally, ChatGPT was only “trained” on data up to September 2021, according to multiple sources. While it can increase its knowledge over time, it has limitations in terms of serving up more recent information.

Last month, CEO Sam Altman reportedly announced that OpenAI’s ChatGPT had gotten an upgrade and would soon be trained on data up to April 2023.

‘Innovative potential’

Dr. Harvey Castro, a Dallas, Texas-based board-certified emergency medicine physician and national speaker on AI in health care, weighed in on the “innovative potential” that ChatGPT offers in the medical arena.

“For general inquiries, ChatGPT can provide quick, accessible information, potentially reducing the workload on health care professionals,” he told Fox News Digital.

“ChatGPT’s machine learning algorithms allow it to improve over time, especially with proper reinforcement learning mechanisms,” he also said.

ChatGPT’s recently reported response inaccuracies, however, pose a “critical issue” with the program, the AI expert pointed out.

“This is particularly concerning in high-stakes fields like medicine,” Castro said.

A health tech expert noted that medical professionals are responsible for “guiding and critiquing” artificial intelligence models as they evolve. (iStock)

Another potential risk is that ChatGPT has been shown to “hallucinate” information, meaning it might generate plausible but false or unverified content, Castro warned.

“This is dangerous in medical settings where accuracy is paramount,” said Castro.

AI “currently lacks the deep, nuanced understanding of medical contexts” possessed by human health care professionals, Castro added.

“While ChatGPT shows promise in health care, its current limitations, particularly in handling drug-related queries, underscore the need for cautious implementation.”

OpenAI, the developer of ChatGPT, has a usage policy that disallows use for medical instruction, a company spokesperson told Fox News Digital earlier this year. (Jaap Arriens/NurPhoto via Getty Images)

Speaking as an ER physician and AI health care consultant, Castro emphasized the “invaluable” role that medical professionals have in “guiding and critiquing this evolving technology.”

“Human oversight remains indispensable, ensuring that AI tools like ChatGPT are used as supplements rather than replacements for professional medical judgment,” Castro added.

Melissa Rudy of Fox News Digital contributed reporting.
